2025-11-21

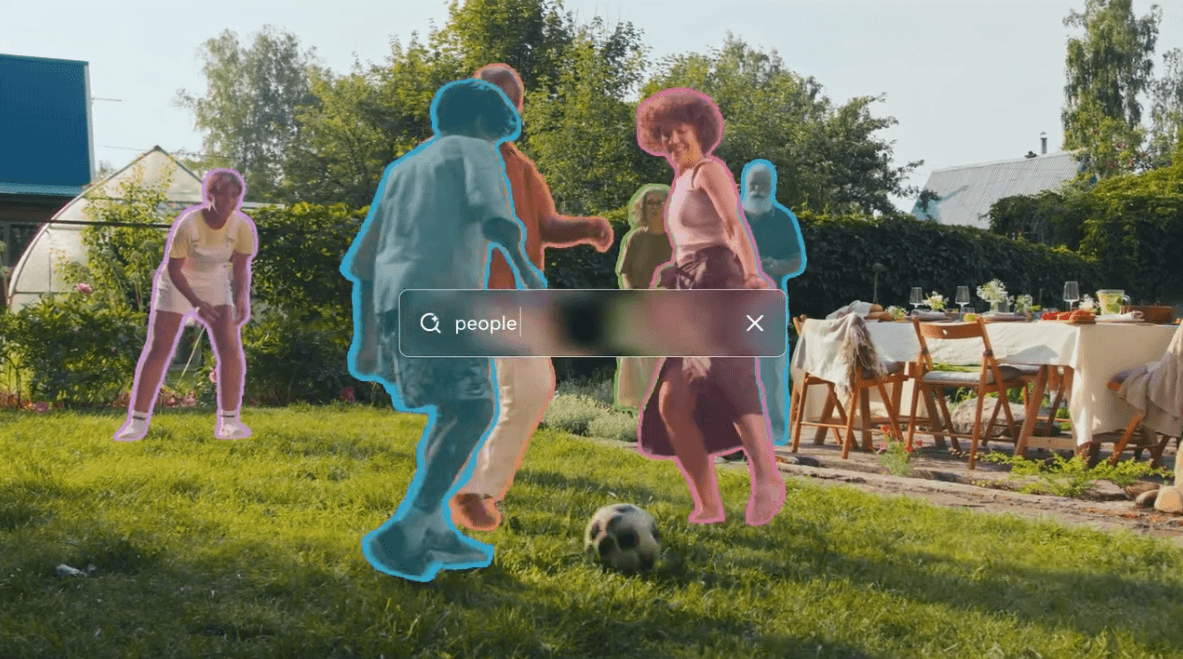

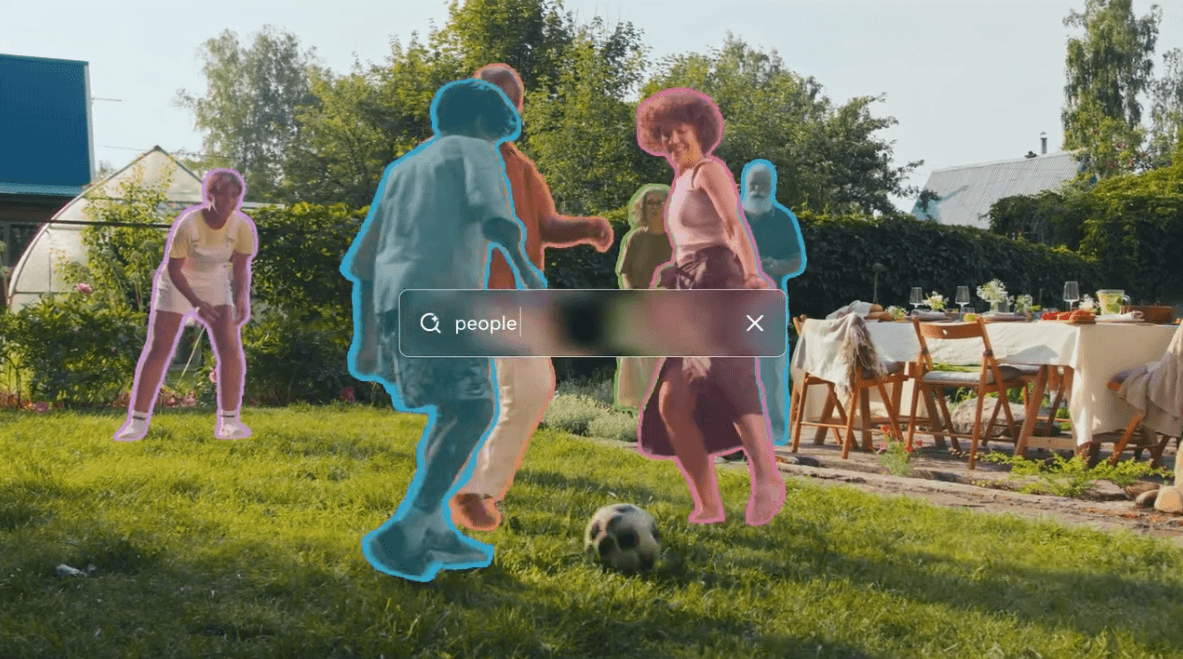

Meta's SAM 3 segmentation model blurs the boundary between language and vision

Meta releases the third generation of its "Segment Anything Model." Unlike standard models limited to fixed categories, SAM 3 uses an open vocabulary to understand both images and videos. The system relies on a new training method combining human and AI annotators.

The article "Meta's SAM 3 segmentation model blurs the boundary between language and vision" appeared first on Discover Copy.

We have discovered similar tools to what you are looking for. Check out our suggestions for similar AI tools.

2025-10-31

How an Oregon court became the stage for a $115,000 showdown between Meta and Facebook creators

Some of the most successful creators on Facebook aren't names you'd ever recognize. In fact, many of their pages don't have a face or recognizable persona attached. Instead, they run pa [...]

2025-11-03

Apple Vision Pro M5 review: A better beta is still a beta

Everything new about the revamped Apple Vision Pro can fit in a single sentence: It has a far faster and more efficient M5 chip, it comes with a more comfortable Dual Knit Band and its display looks s [...]

2025-10-01

Ray-Ban Meta (2nd Gen) review: Smart glasses are finally getting useful

In a lot of ways, Meta hasn't changed much with its second-gen Ray-Ban glasses. The latest model has the same design and largely the same specs as the originals, with two important upgrade [...]

2025-09-18

Everything Meta announced at Connect 2025: Second-gen Ray-Ban Meta, Oakley Meta Vanguard and Meta Ray-Ban Display

At Meta Connect 2025's kickoff event, Mark Zuckerberg unveiled a trio of new smart eyewear, including its first model with augmented reality. Meta's boss also announced the second generation [...]

2025-09-12

What to expect at Meta Connect 2025: 'Hypernova' smart glasses, AI and the metaverse

Meta Connect, the company's annual event dedicated to all things AR, VR, AI and the metaverse, is just days away. And once again, it seems like it will be a big year for smart glasses and AI. [...]

2025-11-10

Meta returns to open source AI with Omnilingual ASR models that can transcribe 1,600+ languages natively

Meta has just released a new multilingual automatic speech recognition (ASR) system supporting 1,600+ languages — dwarfing OpenAI’s open source Whisper model, which supports just 99. Is architectu [...]